Machine Learning Preprocessing Toolkit: Essential Techniques and Algorithms

1. Feature Scaling:

- Description: Scaling numerical features ensures that they are on the same scale, preventing features with larger magnitudes from dominating the model.

- Algorithms: StandardScaler, MinMaxScaler, etc.
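
As a minimal sketch of the two scalers named above, assuming scikit-learn and NumPy are installed, the example below scales a small made-up array whose two columns differ in magnitude by a factor of a thousand. The array `X` and its values are illustrative only.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler, MinMaxScaler

# Toy data (made up): column 0 is small, column 1 is a thousand times larger
X = np.array([[1.0, 1000.0],
              [2.0, 2000.0],
              [3.0, 3000.0]])

# StandardScaler: each column is shifted to mean 0 and scaled to unit variance
X_std = StandardScaler().fit_transform(X)

# MinMaxScaler: each column is rescaled to the range [0, 1] by default
X_minmax = MinMaxScaler().fit_transform(X)

print(X_std)
print(X_minmax)
```

In practice the scaler would usually be fitted on the training split only and then applied to the test split with `transform`, so that test statistics do not leak into training.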

2. Feature Encoding:

- Description: Encoding categorical variables into numerical format is essential for many machine learning algorithms that cannot handle categorical data directly.

- Algorithms: OneHotEncoder, LabelEncoder, TargetEncoder, etc.
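
The following sketch, assuming scikit-learn is installed, shows one-hot encoding of a made-up `colors` column and integer encoding of a made-up list of class labels. Note that scikit-learn documents LabelEncoder as intended for target labels rather than input features, so it is applied to labels here.

```python
import numpy as np
from sklearn.preprocessing import OneHotEncoder, LabelEncoder

# Toy categorical feature (made up); OneHotEncoder expects a 2-D input
colors = np.array([["red"], ["green"], ["blue"], ["green"]])

# handle_unknown="ignore" encodes unseen categories as all zeros at transform time
encoder = OneHotEncoder(handle_unknown="ignore")
onehot = encoder.fit_transform(colors).toarray()  # result is sparse by default

# One output column per category, in sorted order
print(encoder.categories_[0])
print(onehot)

# LabelEncoder maps each distinct class label to an integer
labels = LabelEncoder().fit_transform(["cat", "dog", "cat"])
print(labels)
```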

3. Handling Missing Values:

- Description: Dealing with missing values is crucial to prevent biased model outcomes and ensure robustness.

- Algorithms: SimpleImputer (mean, median, mode), KNNImputer, IterativeImputer, etc.
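
A small sketch of two of the imputers listed above, assuming scikit-learn and NumPy are installed. The array `X` is made up, and `n_neighbors=2` is an arbitrary choice for illustration.

```python
import numpy as np
from sklearn.impute import SimpleImputer, KNNImputer

# Toy data (made up) with one missing value in each column
X = np.array([[1.0, 2.0],
              [np.nan, 4.0],
              [3.0, np.nan],
              [5.0, 6.0]])

# Replace each missing value with the mean of its column
X_mean = SimpleImputer(strategy="mean").fit_transform(X)

# Replace each missing value with the average from the 2 nearest rows
X_knn = KNNImputer(n_neighbors=2).fit_transform(X)

print(X_mean)
print(X_knn)
```

Passing `strategy="median"` or `strategy="most_frequent"` to SimpleImputer gives the median and mode variants.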

4. Data Cleaning:

- Description: Cleaning the data, for example by removing duplicate records, helps ensure data integrity and can improve model performance.

- Algorithms: pandas.DataFrame.drop_duplicates().
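
As a brief sketch of duplicate removal, assuming pandas is installed, the example below builds a made-up DataFrame with one repeated row. The column names `id` and `value` are hypothetical.

```python
import pandas as pd

# Toy data (made up): the row with id 2 appears twice
df = pd.DataFrame({"id": [1, 2, 2, 3],
                   "value": ["a", "b", "b", "c"]})

# Drop rows that are identical across all columns, then renumber the index
deduped = df.drop_duplicates().reset_index(drop=True)

# Alternatively, treat rows as duplicates when only the "id" column matches
by_id = df.drop_duplicates(subset=["id"], keep="first")

print(deduped)
```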

5. Outlier Detection and Handling:

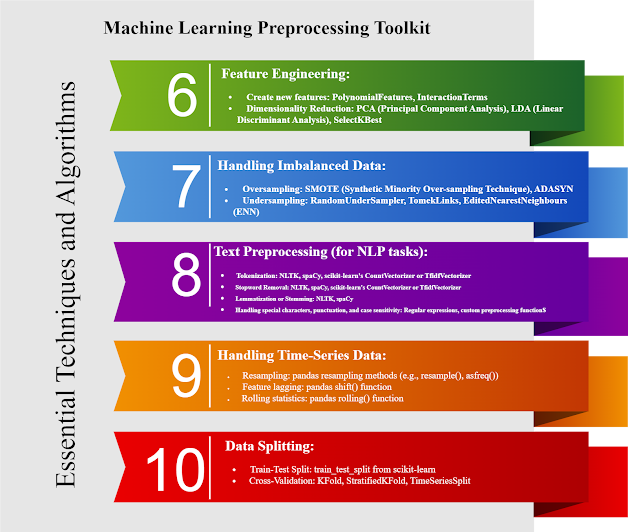

6. Feature Engineering:

- Description: Creating new features, transforming existing ones, or selecting the most informative ones can enhance the predictive power of machine learning models.

- Algorithms: PolynomialFeatures, PCA, SelectKBest, etc.
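
The three tools listed above do different jobs: PolynomialFeatures creates new features, PCA projects features onto fewer dimensions, and SelectKBest keeps a subset of the existing features. A minimal sketch, assuming scikit-learn and NumPy are installed and using randomly generated data with a made-up target:

```python
import numpy as np
from sklearn.preprocessing import PolynomialFeatures
from sklearn.decomposition import PCA
from sklearn.feature_selection import SelectKBest, f_classif

# Synthetic data (made up): 40 samples, 3 features, binary target
rng = np.random.default_rng(0)
X = rng.normal(size=(40, 3))
y = (X[:, 0] > 0).astype(int)

# Add squares and pairwise products: 3 original + 6 degree-2 terms = 9 columns
X_poly = PolynomialFeatures(degree=2, include_bias=False).fit_transform(X)

# Project the 3 features onto 2 principal components
X_pca = PCA(n_components=2).fit_transform(X)

# Keep the single feature with the highest ANOVA F-score against y
X_best = SelectKBest(score_func=f_classif, k=1).fit_transform(X, y)

print(X_poly.shape, X_pca.shape, X_best.shape)
```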

7. Handling Imbalanced Data:

- Description: Addressing class imbalance is essential to prevent models from being biased towards the majority class.

- Algorithms: SMOTE, ADASYN, RandomUnderSampler, etc.
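
SMOTE, ADASYN, and RandomUnderSampler are provided by the separate imbalanced-learn package. To stay within scikit-learn, the sketch below uses a simpler stand-in technique: naive random oversampling with `sklearn.utils.resample`, which duplicates minority rows rather than synthesizing new ones as SMOTE does. The data are made up.

```python
import numpy as np
from sklearn.utils import resample

# Toy imbalanced data (made up): 8 samples of class 0, 2 samples of class 1
X = np.arange(20).reshape(10, 2)
y = np.array([0] * 8 + [1] * 2)

X_maj, y_maj = X[y == 0], y[y == 0]
X_min, y_min = X[y == 1], y[y == 1]

# Sample minority rows with replacement until they match the majority count
X_min_up, y_min_up = resample(
    X_min, y_min, replace=True, n_samples=len(y_maj), random_state=0
)

X_bal = np.vstack([X_maj, X_min_up])
y_bal = np.concatenate([y_maj, y_min_up])

print(np.bincount(y_bal))
```

Resampling of this kind is normally applied to the training split only, so that the test set keeps the original class distribution.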

8. Text Preprocessing (for NLP tasks):

- Description: Preprocessing text data involves tokenization, removing stopwords, and converting text into a format suitable for machine learning models.

- Algorithms: NLTK, spaCy, scikit-learn's CountVectorizer or TfidfVectorizer, etc.
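
A short sketch of the scikit-learn vectorizers mentioned above, assuming scikit-learn is installed. Both vectorizers tokenize and lowercase the text by default, and `stop_words="english"` applies the library's built-in English stop-word list. The two sentences are made up.

```python
from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer

# Toy corpus (made up)
docs = ["The cat sat on the mat.", "The dog chased the cat."]

# Bag of words: one column per remaining token, values are raw counts
count_vec = CountVectorizer(stop_words="english")
counts = count_vec.fit_transform(docs)

# TF-IDF: counts reweighted so that tokens common to many documents count less
tfidf = TfidfVectorizer(stop_words="english").fit_transform(docs)

print(sorted(count_vec.vocabulary_))
print(counts.toarray())
print(tfidf.toarray())
```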

9. Handling Time-Series Data:

- Description: Preprocessing time-series data involves handling temporal dependencies and ensuring data is suitable for modeling.

- Algorithms: pandas resampling methods, rolling() function, shift() function, etc.
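
The three pandas operations named above can be sketched on a small made-up daily series, assuming pandas is installed. The dates, values, and window sizes are illustrative.

```python
import pandas as pd

# Toy daily series (made up): six consecutive days
idx = pd.date_range("2024-01-01", periods=6, freq="D")
ts = pd.Series([1.0, 2.0, 3.0, 4.0, 5.0, 6.0], index=idx)

# Resample: aggregate into 2-day bins and take the mean of each bin
two_day_mean = ts.resample("2D").mean()

# Rolling: mean over a sliding 3-observation window (first two values are NaN)
rolling_mean = ts.rolling(window=3).mean()

# Shift: lag the series by one step to create a "previous day" feature
lag_1 = ts.shift(1)

print(two_day_mean)
print(rolling_mean)
print(lag_1)
```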

10. Data Splitting:

- Description: Splitting the dataset into training and testing sets allows the model to be evaluated on data it did not see during training.

- Algorithms: train_test_split from scikit-learn, KFold, StratifiedKFold, etc.
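
A minimal sketch of a hold-out split and stratified cross-validation, assuming scikit-learn and NumPy are installed. The data, the 80/20 split, and the choice of 5 folds are made up for illustration.

```python
import numpy as np
from sklearn.model_selection import train_test_split, StratifiedKFold

# Toy data (made up): 20 samples, 2 features, balanced binary target
X = np.arange(40).reshape(20, 2)
y = np.array([0] * 10 + [1] * 10)

# Hold out 20% for testing; stratify=y keeps class proportions in both parts
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42
)

# 5-fold stratified cross-validation: every sample is tested exactly once
skf = StratifiedKFold(n_splits=5, shuffle=True, random_state=42)
fold_sizes = [len(test_idx) for _, test_idx in skf.split(X, y)]

print(len(X_train), len(X_test))
print(fold_sizes)
```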

This toolkit provides essential techniques and algorithms for preprocessing data in machine learning, ensuring that datasets are cleaned, transformed, and prepared for model training and evaluation.

Md. Alamgir Hossain

Senior Lecturer

Dept. of CSE

Prime University

#machinelearning

#preprocessing
